How will Agentic AI revolutionize robots?

Agentic AI

“Agency” refers to the ability of a system to act independently and autonomously, without human input. In the context of Artificial Intelligence (AI), Agentic AI is an AI system that can operate on its own, without human guidance. A fully self-driving car is an example of an Agentic AI system: once a destination address is specified, it can take its passengers there without intervention. This article examines ways in which Agentic AI can impact and revolutionize robotics.

According to Andrew Ng of Landing.ai, Agentic AI will drive massive progress in AI this year, outpacing the development of new language models. The linked article lists several design patterns that are emerging for Agentic AI with Large Language Models (LLMs). Two of them, tooling and planning, are particularly relevant to robots, and we discuss them here with examples using the Anki Vector robot. Before reviewing these design patterns, it may be useful to revisit some interesting demos we have recently created with the Vector robot and written about. Watch how the Vector robot responds to a user request by taking a picture and describing what he sees, and how he animates while narrating a story. Now let’s discuss how Agentic AI can make the Vector robot better.

Tooling

One limitation of language models is that their knowledge is limited to the data they were trained (or fine-tuned) on. For example, when you ask Vector, “How is the weather today?”, the question is better answered by a weather service such as OpenWeatherMap than by GPT-4 or Llama3. On the other hand, if you ask, “Could you recommend how to dress for the weather today?”, OpenWeatherMap cannot help you, because it has no information besides the weather. GPT-4 or Llama3, however, could answer that question if they had access to today’s weather data.

Tooling refers to the ability of Large Language Models (LLMs) to invoke APIs to retrieve information, and then process that information to generate the best answer to the question asked. With good tooling, an LLM could make the right query to get the weather details for your location, and then use that information to generate the best answer for you. Given that the web is rich with APIs and services, integrating a language model with efficient tooling can greatly enhance its capabilities.
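To make the tooling idea concrete, here is a minimal Python sketch of one tool-calling round trip for the clothing question above. It assumes the model signals a tool call by emitting JSON of the form `{"tool": ..., "args": ...}`; that format, the `get_weather` function, the `fake_llm` stub, and the city "Boston" are hypothetical stand-ins, not the actual API of OpenWeatherMap, GPT-4, or Llama3.

```python
import json
from typing import Callable, Dict

# Hypothetical tool. A real system would query a weather service such as
# OpenWeatherMap over HTTP; this returns canned data so the sketch runs offline.
def get_weather(city: str) -> dict:
    return {"city": city, "temp_c": 8, "condition": "light rain"}

# Registry mapping tool names (as the model would emit them) to Python functions.
TOOLS: Dict[str, Callable[..., dict]] = {"get_weather": get_weather}

def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM. A real model decides on its own whether
    to request a tool; this stub hard-codes that decision for illustration."""
    if "TOOL_RESULT:" not in prompt:
        # First turn: ask for the weather by emitting a JSON tool call.
        return json.dumps({"tool": "get_weather", "args": {"city": "Boston"}})
    # Second turn: reason over the tool result appended to the prompt.
    result = json.loads(prompt.split("TOOL_RESULT:", 1)[1])
    if "rain" in result["condition"]:
        return (f"It is {result['temp_c']} C with {result['condition']}: "
                "wear a warm coat and take an umbrella.")
    return f"It is {result['temp_c']} C: a light jacket should do."

def answer(question: str) -> str:
    reply = fake_llm(question)
    try:
        call = json.loads(reply)            # 1. did the model request a tool?
    except json.JSONDecodeError:
        return reply                        # no tool call: reply is the answer
    result = TOOLS[call["tool"]](**call["args"])  # 2-3. look up and run the tool
    # 4. feed the tool result back so the model can compose the final answer
    return fake_llm(question + "\nTOOL_RESULT:" + json.dumps(result))

print(answer("Could you recommend how to dress according to the weather today?"))
```

In a real system `get_weather` would make an HTTP request and `fake_llm` would call an actual model; the shape of the loop (the model requests a tool, the program runs it, the result goes back into the prompt) is what this sketch is meant to illustrate.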

The challenge has been that language models have traditionally hallucinated when generating the correct syntax for an API call. There are many reasons for this; for example, many APIs offer similar functionality but take different input parameters, so generating the correct API invocation for the goal of a given query is not an easy task. Recently, a project called Gorilla from the University of California, Berkeley has tried to marry the worlds of APIs and language models. Gorilla specifies the API descriptions as part of its system prompt. You can try Gorilla here.
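One way to reduce hallucinated API calls, sketched below in Python, is to list the available APIs in the system prompt and then check each model-generated call against those descriptions before executing it. This is not Gorilla's implementation; the two weather APIs, their parameters, and the JSON call format are hypothetical, chosen to show how two similar APIs with different parameters can be confused.

```python
import json

# Hypothetical API descriptions: two services with similar functionality but
# different input parameters, which is where models tend to mix them up.
API_SPECS = {
    "weather_by_city": {"description": "Current weather for a city name.",
                        "required": {"city": str}, "optional": {"units": str}},
    "weather_by_coords": {"description": "Current weather for a latitude/longitude.",
                          "required": {"lat": float, "lon": float},
                          "optional": {"units": str}},
}

def build_system_prompt(specs: dict) -> str:
    """Render the API descriptions as text to place in the system prompt."""
    lines = ['You can call these APIs. Reply with JSON {"api": ..., "args": {...}}.']
    for name, spec in specs.items():
        params = [f"{p}: {t.__name__}" for p, t in spec["required"].items()]
        params += [f"{p}?: {t.__name__}" for p, t in spec["optional"].items()]
        lines.append(f"- {name}({', '.join(params)}): {spec['description']}")
    return "\n".join(lines)

def validate_call(raw: str, specs: dict) -> list:
    """Return a list of problems with a model-generated call (empty list = valid)."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError:
        return ["not valid JSON"]
    if not isinstance(call, dict):
        return ["call must be a JSON object"]
    spec = specs.get(call.get("api"))
    if spec is None:
        return [f"unknown API {call.get('api')!r}"]
    args = call.get("args", {})
    allowed = {**spec["required"], **spec["optional"]}
    errors = [f"missing argument {p!r}" for p in spec["required"] if p not in args]
    for name, value in args.items():
        if name not in allowed:
            errors.append(f"unexpected argument {name!r}")
        elif not isinstance(value, allowed[name]):
            errors.append(f"argument {name!r} should be {allowed[name].__name__}")
    return errors

print(build_system_prompt(API_SPECS))
# A hallucinated call: right API name, but the parameters of the other API.
print(validate_call('{"api": "weather_by_city", "args": {"lat": 42.36, "lon": -71.06}}',
                    API_SPECS))
# → ["missing argument 'city'", "unexpected argument 'lat'", "unexpected argument 'lon'"]
```

The list of problems could be fed back to the model as a prompt to retry, rather than sending a malformed request to the real service.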

Editor’s Note: If you are interested in the development of foundational machine learning models for robotics, I recommend Nathan’s post about his visit to Physical Intelligence, which is trying to build the GPT equivalent for robotics.

Planning

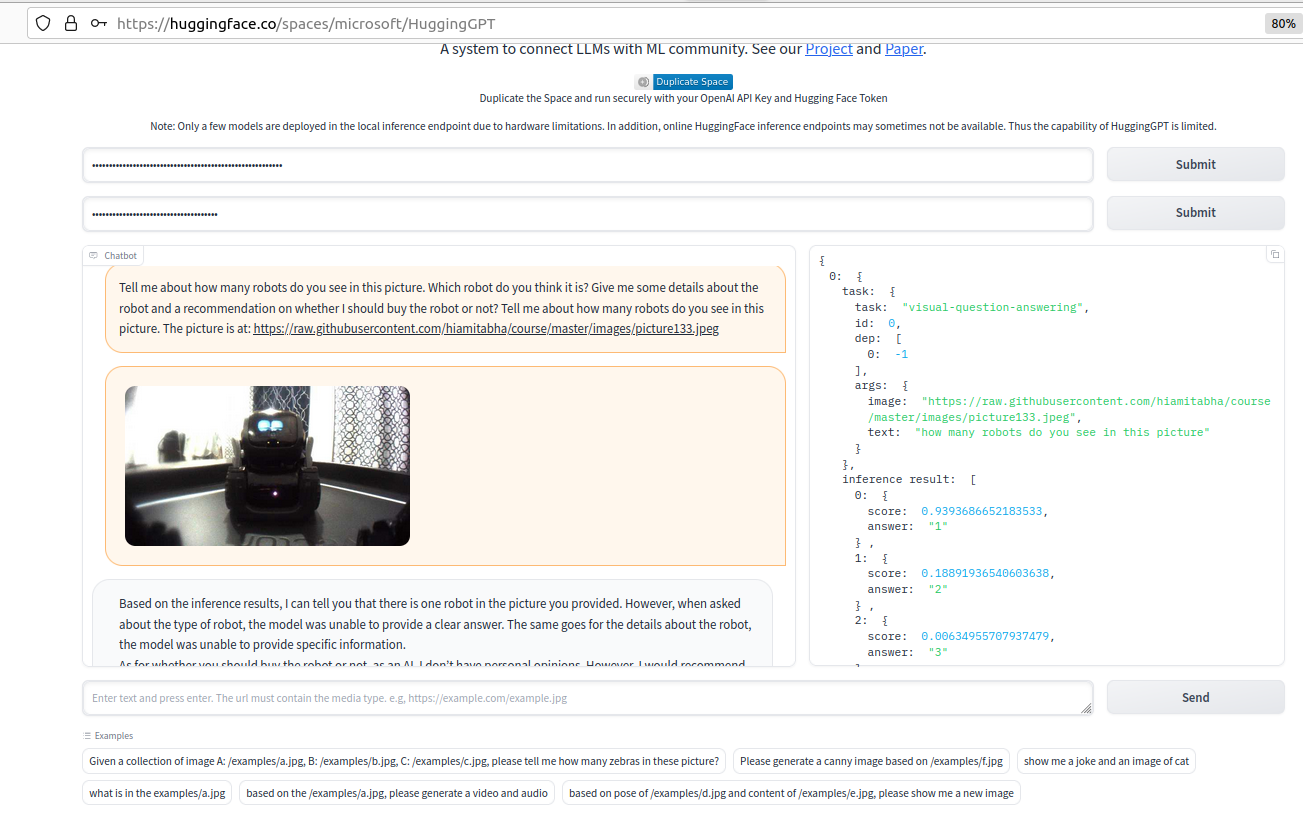

Planning differs from tooling in that, in planning, an LLM decides on an action plan to solve a problem and also selects the sub-models best suited to carry out each step of that plan. An important work in this space is HuggingGPT, which tries to solve a task with the help of ChatGPT and many open-source models on Hugging Face. HuggingGPT works in four stages: (i) Task planning, in which ChatGPT decomposes the input prompt into several subtasks; (ii) Model selection, in which each subtask is mapped to a model (out of a list of available open-source models) based on the models' text descriptions; (iii) Task execution, in which the selected models are run to accomplish the subtasks; and (iv) Response generation, in which ChatGPT generates a response from the results. The graphic above, from the HuggingGPT paper, explains these four stages with the help of an example input prompt. Note how different models are chosen to accomplish different tasks.
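The four stages can be sketched as a small Python pipeline, using a request like the Vector photo experiment described in the next paragraph. Everything here is a hypothetical stand-in: HuggingGPT uses ChatGPT for planning, model selection, and response generation and runs real Hugging Face models, whereas this sketch hard-codes the plan, replaces ChatGPT's model selection with a simple word-overlap score over model descriptions, and uses made-up model names and outputs.

```python
import re
from typing import Dict, List

# Stage 1: task planning. HuggingGPT asks ChatGPT to split the request into
# subtasks; this stub returns a fixed plan for the Vector photo example.
def plan_tasks(request: str) -> List[dict]:
    return [
        {"id": 0, "task": "object-detection", "args": {"image": "vector.jpg"}},
        {"id": 1, "task": "image-to-text", "args": {"image": "vector.jpg"}},
    ]

# Hypothetical model registry: names, descriptions, and outputs are made up.
MODELS = [
    {"name": "detector-a", "task": "object-detection",
     "description": "detects everyday objects and robots in photos",
     "run": lambda args: {"objects": ["robot"]}},
    {"name": "detector-b", "task": "object-detection",
     "description": "detects cars in street scenes",
     "run": lambda args: {"objects": []}},
    {"name": "captioner", "task": "image-to-text",
     "description": "writes a one-line caption for a photo",
     "run": lambda args: {"caption": "a small robot on a desk"}},
]

def words(text: str) -> set:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z]+", text.lower()))

# Stage 2: model selection. Among models for the task type, pick the one whose
# description shares the most words with the request (a stand-in for ChatGPT).
def select_model(task: dict, request: str) -> dict:
    candidates = [m for m in MODELS if m["task"] == task["task"]]
    return max(candidates, key=lambda m: len(words(request) & words(m["description"])))

# Stage 3: task execution. Run each subtask with its selected model.
def execute(plan: List[dict], request: str) -> Dict[int, dict]:
    results = {}
    for task in plan:
        model = select_model(task, request)
        results[task["id"]] = {"model": model["name"],
                               "output": model["run"](task["args"])}
    return results

# Stage 4: response generation. HuggingGPT has ChatGPT summarize the results;
# this stub fills a template and assumes the two-task plan above.
def respond(results: Dict[int, dict]) -> str:
    objects = results[0]["output"]["objects"]
    caption = results[1]["output"]["caption"]
    return f"I can see {objects.count('robot')} robot(s): {caption}."

request = "How many robots can you see in this photo, and can you describe them?"
results = execute(plan_tasks(request), request)
print(respond(results))  # → I can see 1 robot(s): a small robot on a desk.
```

Because both detectors handle `object-detection`, the text description decides between them: `detector-a` shares "robots", "and", and "in" with the request, while `detector-b` shares only "in".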

You can easily try HuggingGPT here. We supplied it with a picture taken by the Anki Vector and asked how many robots could be seen in the image and whether it could describe them. HuggingGPT correctly identified one robot but was unable to provide further details about it.

Conclusion

Planning and tooling can be used together by a robotic agent to make LLMs like ChatGPT much more useful for robots. While these concepts are technically fascinating, the challenge lies in delivering a working robot built on these techniques. We look forward to seeing how the Agentic AI space evolves over the next year.