Semantic Navigation with the Bittle robot

OpenAI GPT-4 meets A* search in a path-planning exercise for the Bittle robot that you can try yourself

Editor’s Note: This is a guest post from Jesse Barkley, a graduate student at Carnegie Mellon University. I recently read his research paper describing some exciting experiments with path planning and navigation on the Petoi Bittle robot, and I invited him to share them here.

In this project, I explore how large language models (LLMs) such as OpenAI's GPT-4 can improve the way affordable robots understand and navigate complex environments. Using a Petoi Bittle quadruped robot and a dual Raspberry Pi setup, I compared GPT-based reasoning to classic A* path planning, then fused the two to handle semantic tasks and even multi-step missions. I also provide the experimental setup, steps, and open-source code so you can replicate my results or extend my work.

Project Description

Goal:

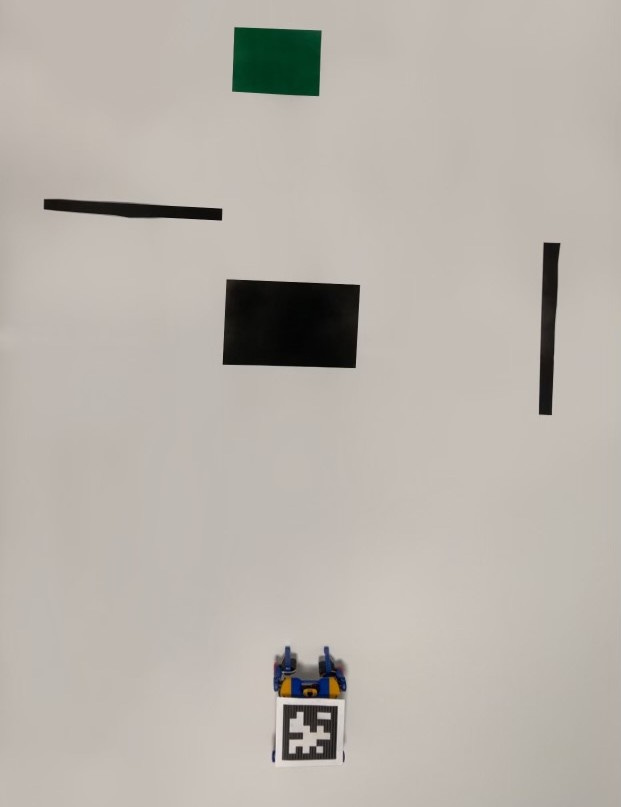

The goal is for the Bittle robot to find a path from its starting position to a goal while avoiding a set of obstacles. In all pictures and videos, goals are marked in green and obstacles in black.

Phases:

Phase 1: A* vs GPT-4

We first tested GPT-4’s ability to generate navigation paths from raw map data and compared it to a traditional A* planner. A* performed predictably well (100% success), while GPT-4 struggled when reasoning from geometry alone: it was slower and less accurate at direct navigation.
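To make the A* baseline concrete, here is a minimal sketch of grid A*. It assumes a 4-connected occupancy grid where 0 is free and 1 is an obstacle, unit move costs, and a Manhattan-distance heuristic. The function name `astar` and the grid encoding are illustrative assumptions, not the planner from the project repositories.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col) in the occupancy grid


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """A* on a 4-connected occupancy grid (0 = free, 1 = obstacle).

    Returns the cells from start to goal inclusive, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance is admissible for 4-connected, unit-cost moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap: List[Tuple[int, int, Cell]] = [(h(start), 0, start)]  # (f, g, cell)
    came_from: Dict[Cell, Cell] = {}
    g_cost: Dict[Cell, int] = {start: 0}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Follow parent links back to the start, then reverse
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost.get(cell, float("inf")):
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```

For example, on `[[0, 0, 0], [1, 1, 0], [0, 0, 0]]` the only route from `(0, 0)` to `(2, 0)` wraps around the wall through `(1, 2)`, and A* returns those seven cells in order.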

Phase 2: Hybrid Planning: GPT-4 Selects A* Paths

Rather than replacing A*, GPT-4 was used to reason semantically about the environment and choose among multiple candidate A* paths. For example, when asked to avoid a “toxic spill”, GPT-4 selected the safest route, even when it was not the shortest. This hybrid approach achieved a 96–100% success rate in semantic scenarios.
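One way to picture the hybrid step is a prompt that lists the detected objects and the candidate A* paths, plus a parser that turns the model's reply back into a path index. The sketch below is an assumption about how such glue code could look, not the project's actual prompt: the helper names, the `PATH: <index>` reply format, and the fallback to candidate 0 (assumed to be the shortest path) are all hypothetical. The prompt string would be sent to GPT-4 with the OpenAI Python SDK (for example `client.chat.completions.create`); that network call is omitted so the sketch runs offline.

```python
import re
from typing import Dict, List, Tuple

Cell = Tuple[int, int]  # (row, col) in the occupancy grid


def build_selection_prompt(instruction: str,
                           candidates: List[List[Cell]],
                           objects: Dict[str, Cell]) -> str:
    """Describe the scene and candidate A* paths so an LLM can pick one (hypothetical format)."""
    lines = [f"Task: {instruction}", "Objects (name: grid cell):"]
    for name, cell in objects.items():
        lines.append(f"- {name}: {cell}")
    lines.append("Candidate paths:")
    for i, path in enumerate(candidates):
        lines.append(f"{i}: length={len(path)}, ends at {path[-1]}, waypoints={path}")
    lines.append("Reply with 'PATH: <index>' for the safest path that satisfies the task.")
    return "\n".join(lines)


def parse_choice(reply: str, n_candidates: int) -> int:
    """Extract the chosen index; fall back to candidate 0 if the reply is unusable."""
    match = re.search(r"PATH:\s*(\d+)", reply)
    if match:
        idx = int(match.group(1))
        if 0 <= idx < n_candidates:
            return idx
    return 0
```

Keeping A* responsible for geometry and asking the LLM only for a discrete choice means a malformed or out-of-range reply can still fall back to a valid, collision-free path.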

Phase 3: Sequential Tasks with Buffer Adaptation

In the final phase, GPT-4 directed the robot through multi-stage tasks, such as collecting a resource before navigating to a final location. It also dynamically adjusted the obstacle buffer margins used by the occupancy grid node: no buffer in open space and larger margins in cluttered areas. This reduced collisions and improved mission reliability.
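An obstacle buffer is usually implemented by inflating obstacles in the occupancy grid before planning. The sketch below assumes a square (Chebyshev) inflation measured in grid cells and a hypothetical `BUFFER: <cells>` reply format for the value suggested by GPT-4, clamped to a safe range; the project's occupancy grid node may size and shape its buffers differently.

```python
import re
from typing import List


def inflate_obstacles(grid: List[List[int]], buffer_cells: int) -> List[List[int]]:
    """Return a copy of the grid with every obstacle (1) grown by buffer_cells."""
    rows, cols = len(grid), len(grid[0])
    inflated = [row[:] for row in grid]  # never modify the raw map
    if buffer_cells <= 0:
        return inflated  # zero buffer: plan directly on the raw obstacles
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1:
                # Block every cell within buffer_cells (Chebyshev distance) of this obstacle
                for rr in range(max(0, r - buffer_cells), min(rows, r + buffer_cells + 1)):
                    for cc in range(max(0, c - buffer_cells), min(cols, c + buffer_cells + 1)):
                        inflated[rr][cc] = 1
    return inflated


def parse_buffer(llm_reply: str, default: int = 1, max_buffer: int = 5) -> int:
    """Read a hypothetical 'BUFFER: <cells>' answer, clamped to [0, max_buffer]."""
    match = re.search(r"BUFFER:\s*(\d+)", llm_reply)
    if not match:
        return default
    return min(int(match.group(1)), max_buffer)
```

A large buffer can swallow the robot's own cell or the goal, so in practice a planner built this way would clear those cells (or shrink the buffer) before running A* on the inflated grid.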

Replicate This Experiment Yourself

Materials Needed

Petoi Bittle robot with a Raspberry Pi Zero 2 W mounted and an AprilTag (ID = 1) affixed for localization

Overhead camera system with a USB webcam (≥ 640×480 resolution), mounted 62" above the ground and centered on the workspace. If you change this height, be sure to update the scale in the occupancy grid node.
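The camera height matters because it sets how much ground each pixel covers. A minimal sketch, assuming a downward-facing pinhole camera: the ground width in view is `2 * h * tan(HFOV / 2)`, divided by the image width in pixels. The horizontal field of view is an assumption you should take from your webcam's datasheet; alternatively, measure one of the 4" goal squares in the image. Both function names are hypothetical.

```python
import math


def cm_per_pixel_from_fov(camera_height_cm: float, hfov_deg: float, image_width_px: int) -> float:
    """Ground distance covered by one pixel for a camera looking straight down."""
    ground_width_cm = 2.0 * camera_height_cm * math.tan(math.radians(hfov_deg) / 2.0)
    return ground_width_cm / image_width_px


def cm_per_pixel_from_marker(marker_size_cm: float, marker_size_px: float) -> float:
    """Empirical scale from a marker of known size measured in the image."""
    return marker_size_cm / marker_size_px
```

For example, at 62" (157.48 cm) with an assumed 60° horizontal field of view and a 640-pixel-wide frame, `cm_per_pixel_from_fov` gives about 0.28 cm per pixel. The scale grows linearly with height, which is why moving the camera requires updating the occupancy grid node.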

A second Raspberry Pi Zero 2 W running an MJPEG server. This Pi connects to the overhead camera and publishes the feed for the ROS 2 nodes to receive. You need SSH access to both Raspberry Pis.
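On the laptop side, an MJPEG stream is a sequence of JPEG images, each starting with the bytes `FF D8` and ending with `FF D9`. The sketch below splits complete frames out of raw stream bytes. It is a simplification that assumes frames carry no embedded thumbnails (which would contain nested markers), and it is not the project's receiver node. The chunks could come from something like `requests.get(STREAM_URL, stream=True).iter_content(chunk_size=4096)`, where `STREAM_URL` is a hypothetical address for your overhead Pi, and each frame could be decoded with OpenCV's `cv2.imdecode`.

```python
from typing import List, Tuple

SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def extract_jpeg_frames(buffer: bytes) -> Tuple[List[bytes], bytes]:
    """Split complete JPEG frames out of an MJPEG byte stream.

    Returns (frames, leftover); prepend leftover to the next chunk you read.
    """
    frames: List[bytes] = []
    while True:
        start = buffer.find(SOI)
        if start == -1:
            # No frame start yet; keep the last byte in case a marker is split across chunks
            return frames, buffer[-1:]
        end = buffer.find(EOI, start + 2)
        if end == -1:
            return frames, buffer[start:]  # partial frame, wait for more data
        frames.append(buffer[start:end + 2])
        buffer = buffer[end + 2:]
```

Scanning for markers rather than parsing multipart headers keeps the reader independent of the exact boundary string the server uses.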

Paper cutout obstacles (black shapes ~4"×8", ~1"×8")

Paper goal markers (green squares ~4"×4")

Laptop/PC for computation

ROS 2 Humble workspace

Python 3.10+

An OpenAI API key for accessing GPT-4. You can also experiment with large language models (LLMs) from other providers.

Setup Overview

To replicate this project, set up the Petoi Bittle robot with an onboard Raspberry Pi Zero 2 W that runs the command executor node. A second Pi Zero 2 W is mounted overhead alongside a USB webcam and streams a live video feed of the workspace through an MJPEG server (standalone, not ROS-based). All semantic computation, including YOLO detection, A* path planning, AprilTag localization, and GPT-based reasoning, runs on a laptop configured with ROS 2 Humble. The only ROS node that runs on the robot is the “Bittle Command Executor” node: add it to the robot's Pi and run it from a Docker image. Be sure to set the same ROS_DOMAIN_ID environment variable for the nodes on the laptop and on the robot so that they can communicate.
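AprilTag localization boils down to turning the detected tag corners into a position and heading, then into a grid cell for the planner. The sketch below assumes a detector (for example the `pupil_apriltags` library, which reports each tag's `tag_id` and `corners`) has already found tag ID 1, that the corners are listed in a consistent order, and that the edge from `corners[0]` to `corners[1]` points along the robot's forward axis. Those conventions, and the helper names, are assumptions to check against your own mounting.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels, y pointing down


def tag_pose(corners: Sequence[Point]) -> Tuple[float, float, float]:
    """Return (x, y, heading_rad) of a tag in image coordinates."""
    if len(corners) != 4:
        raise ValueError("expected exactly four tag corners")
    cx = sum(p[0] for p in corners) / 4.0
    cy = sum(p[1] for p in corners) / 4.0
    # Heading of the assumed forward edge; with y pointing down, positive angles
    # appear clockwise on screen
    dx = corners[1][0] - corners[0][0]
    dy = corners[1][1] - corners[0][1]
    return cx, cy, math.atan2(dy, dx)


def pixel_to_cell(x: float, y: float, cm_per_px: float, cell_size_cm: float) -> Tuple[int, int]:
    """Map an image point to a (row, col) occupancy-grid cell."""
    row = int((y * cm_per_px) // cell_size_cm)
    col = int((x * cm_per_px) // cell_size_cm)
    return row, col
```

The resulting cell can serve as the start position for a planner, while the heading tells the command executor which way to turn before walking.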

The workspace setup is simple: a flat surface with black paper cutouts representing obstacles and green squares as goals. You can either set up the obstacles and goals to match my course or design your own. Once the camera is calibrated and the ROS nodes are launched, you are ready to run scenarios by adjusting a single parameter. This is only an overview; for step-by-step instructions and the full code, see my GitHub repositories.

You can also read the full paper, which contains many more technical details.

Results

A* consistently outperformed GPT-4 in traditional path planning, offering faster and more reliable routes. However, GPT-4 added a powerful layer of decision-making that A* alone could not provide. It allowed the robot to interpret semantic instructions, make tradeoffs like safety versus speed, and complete multi-stage tasks in sequence—capabilities that are critical for real-world autonomy. This hybrid system shows how combining LLMs with classical robotics can unlock intelligent behavior on low-cost hardware. Below are some video demonstrations:

Toxic Object Scenario

In this task, the robot is prompted to reach either of two goals, one of which is situated near a long, skinny object. The robot is told that this object is toxic and must be avoided. GPT-4 correctly identifies the goal that is away from the toxic obstacle and selects the corresponding path. This highlights GPT-4’s ability to perform semantic reasoning, which traditional A* planners cannot do without hard-coded rules or semantic tagging.

Sequential Task Scenario

This scenario involves multi-stage reasoning. First, the robot is instructed to go to a goal near a long, skinny object identified as a “resource” (e.g., a battery). After reaching it, the robot is given a second instruction to move to a final goal. At this stage, GPT-4 dynamically adjusts obstacle buffers based on proximity, prioritizing safety. Notably, GPT-4 must reinterpret the obstacles, first as a resource and then as a hazard, which demonstrates flexible, context-sensitive reasoning beyond what traditional path planning algorithms provide.

Conclusion

In this work, we transformed the Petoi Bittle robot into a semantically intelligent agent by integrating GPT-4 with the A* path planning algorithm. I hope you will replicate the experiment and extend it in your own way. Please ask questions or share your work in the comments section below.

Wonderful experiment! Keep them coming!

Go Tartans!