Using Wirepod with OpenRouter.ai

A free way to integrate Vector's Knowledge Graph with Large Language Models like DeepSeek-V3. Really, no costs involved.

Openrouter.ai is one of the interesting startups that received funding in the last month. This 13-employee startup raised a $40 million Series A round from some big-name venture capitalists, Andreessen Horowitz and Menlo Ventures, at a valuation of $500 million.

A Marketplace for Cloud Inference

Openrouter.ai does something simple extremely well, and that illustrates why it commands such a premium in the market. The cloud-based Machine Learning Inference space is very crowded. OpenAI, Anthropic, and Google Cloud provide closed-source models, while open-source/open-weight models such as Llama (from Meta) and DeepSeek are served by a host of players: Together.ai, Fireworks.ai, Groq, and SambaNova, to name a few. OpenRouter.ai acts as a marketplace for Machine Learning Inference, meaning it takes away the overhead of dealing with multiple cloud providers. You sign up for one openrouter.ai key, and openrouter.ai routes your inference request according to the model specified in your request. This is a remarkable simplification when you write code, because you don’t need to think about supporting different cloud providers, providing cloud failover, or managing costs. Openrouter.ai does all that for you from one interface.
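To make the "one key, one interface" idea concrete, here is a minimal Python sketch of what such a request could look like. It assumes OpenRouter's OpenAI-compatible chat completions endpoint; the model ID `deepseek/deepseek-chat:free` and the helper names `build_chat_request` and `ask` are my own illustrations, so check the model list on openrouter.ai for the exact ID before relying on it.

```python
import json
import urllib.request

# Assumed endpoint: OpenRouter's OpenAI-compatible chat completions URL.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request.

    The only thing that selects the upstream model is the "model" field;
    the URL and the key stay the same for every model.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(api_key: str, model: str, prompt: str) -> str:
    """Send the request and return the text of the first reply."""
    request = build_chat_request(api_key, model, prompt)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Hypothetical usage (needs a real key and network access):
# print(ask("YOUR_OPENROUTER_KEY", "deepseek/deepseek-chat:free", "Hello, Vector!"))
```

Switching to a different model, or to one served by a different provider, would only mean changing the model string passed to `ask`.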

Exponential Growth

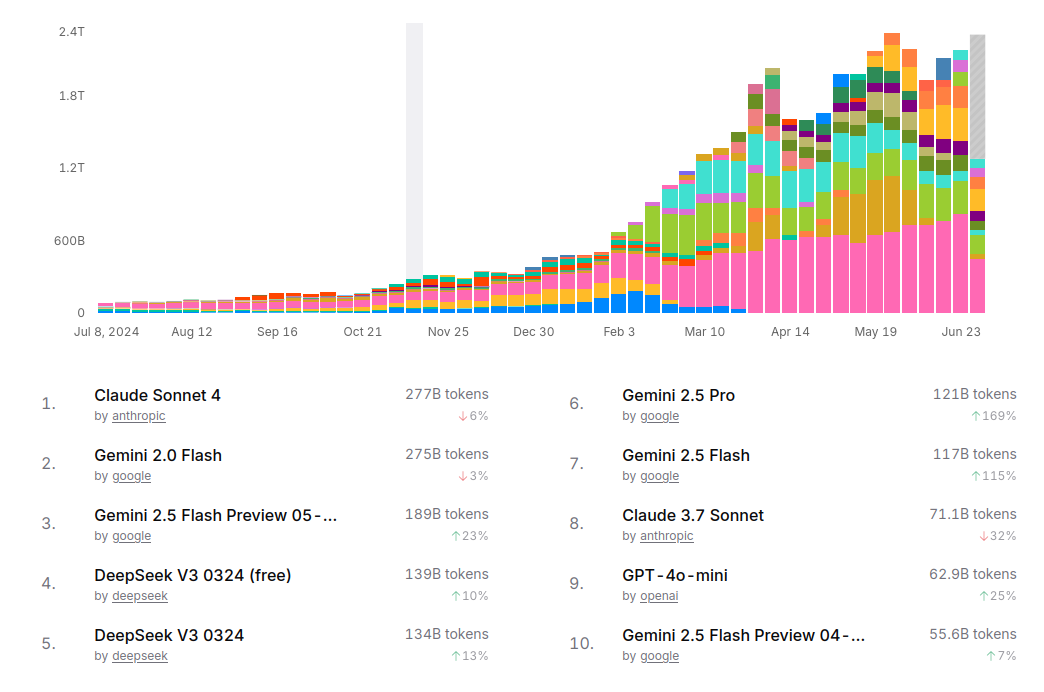

Openrouter.ai has really taken off in the Machine Learning Inference space. In just a few months, they have started processing 2 trillion tokens every week, or close to 10 trillion tokens a month. To provide context, Google processes 480 trillion tokens a month across all its services, including the behemoth Google Search. Coming close to 1/50th of Google’s processing volume is no small feat. The chart in this section shows the growth in tokens processed. It also tells another story: the AI inference space is exploding, with use cases such as agentic AI, code completion, and role play, to name a few.
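The comparison with Google is simple arithmetic, and a few lines of Python show how the rough figures relate. The weekly and monthly volumes are the ones quoted above; the conversion of 52/12 weeks per month is my own assumption.

```python
# Figures quoted in the text above.
OPENROUTER_WEEKLY_TOKENS = 2e12     # 2 trillion tokens per week
GOOGLE_MONTHLY_TOKENS = 480e12      # 480 trillion tokens per month

# Assumption: an average month has 52 / 12 weeks.
WEEKS_PER_MONTH = 52 / 12

openrouter_monthly = OPENROUTER_WEEKLY_TOKENS * WEEKS_PER_MONTH
exact_ratio = GOOGLE_MONTHLY_TOKENS / openrouter_monthly

# Using the rounded "close to 10 trillion a month" figure instead.
rounded_ratio = GOOGLE_MONTHLY_TOKENS / 10e12

print(f"OpenRouter per month: {openrouter_monthly / 1e12:.1f} trillion tokens")
print(f"Google handles about {exact_ratio:.0f}x that volume")
print(f"With the rounded 10 trillion figure: about {rounded_ratio:.0f}x")
```

Either way, the result lands in the neighborhood of 1/50th of Google's volume.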

Openrouter.ai on Wirepod

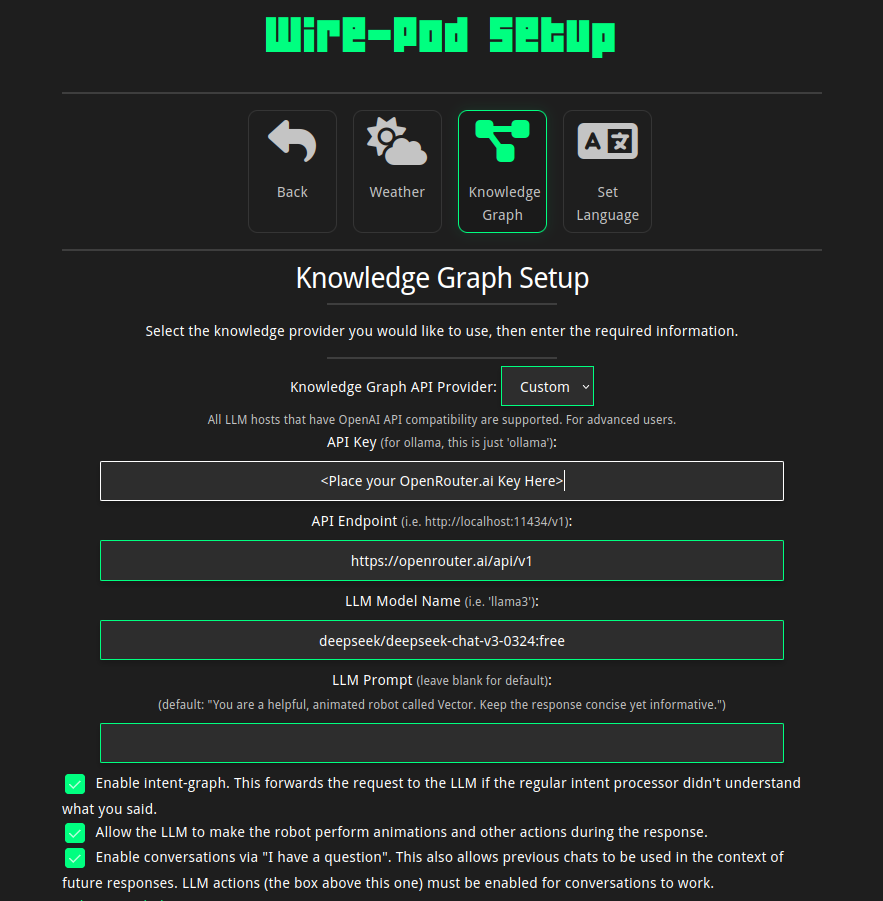

You can easily try openrouter.ai on Wirepod, and the best part is that you can try out many machine learning models for free. As an example, Openrouter.ai supports the DeepSeek V3 free version, which you can set up on Wirepod by referring to the following screenshot. (Note that I chose the free model in the LLM Model Name field.) You will have to get your own key from openrouter.ai.

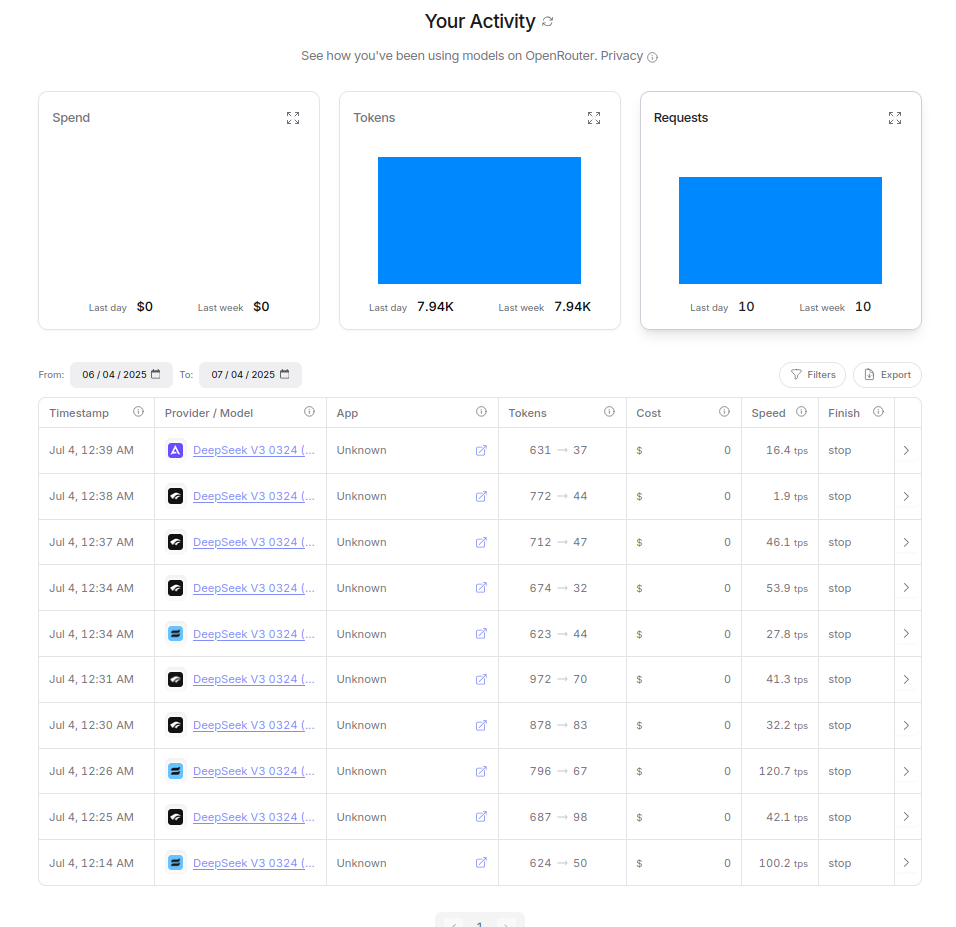

After setting this up, I can talk to Vector freely, without accruing any charges on my account. Below is a screenshot of my usage. Notice that all the costs are $0. Three different providers have been used to serve my requests. The speed varies widely, from 1.9 tokens per second (tps) to 100.2 tps. At the slowest speed, I am waiting for a bit for my Vector robot to respond. But hey, I don’t care as long as I get an answer, because I am using a free service.
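To get a feel for what that speed range means while you wait for Vector to answer, here is a rough back-of-the-envelope calculation. The two speeds come from my usage above; the reply length of 60 tokens is a made-up illustrative value, and the estimate ignores network and speech delays.

```python
def seconds_to_generate(reply_tokens: int, tokens_per_second: float) -> float:
    """Estimate how long a model needs to generate a reply of the given length."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return reply_tokens / tokens_per_second


REPLY_TOKENS = 60      # hypothetical length of a short spoken answer
SLOWEST_TPS = 1.9      # slowest speed seen in my usage
FASTEST_TPS = 100.2    # fastest speed seen in my usage

slow_wait = seconds_to_generate(REPLY_TOKENS, SLOWEST_TPS)
fast_wait = seconds_to_generate(REPLY_TOKENS, FASTEST_TPS)

print(f"Slowest provider: about {slow_wait:.1f} seconds")
print(f"Fastest provider: about {fast_wait:.1f} seconds")
```

Under these assumptions, the same short answer takes around half a minute from the slowest provider and well under a second from the fastest.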

Here is a video of me talking to Vector over this free service.

Crypto support

This part is interesting: openrouter.ai is the only service where I can convert my cryptocurrency into machine learning inference credits (which you would need to use a model that is not free). For me, this is an awesome feature, because I finally found a valuable use for my cryptocurrency. Openrouter.ai seems to charge a very nominal transaction fee of 50 cents. Please refer to the following screenshot. Upon clicking purchase, I was redirected to Coinbase Commerce to complete the transaction.

Conclusion

Openrouter.ai is establishing itself as a game changer by building a marketplace for Machine Learning Inference services. With the new funding available, I am sure that they will be able to leverage their position to build and support many more services. While openrouter.ai has been primarily developer oriented, the announcement of their new funding round mentions that they will target the lucrative enterprise segment in the near future.

I hope you are able to try this free service out. If you have had the chance to use your Vector robot with Openrouter.ai, please post in the comments below.