Would You Turn Off a Robot Teammate if a Robot Manager Instructed You to Do So?

A new study from the University of Chicago reveals that we are more loyal than you might think

We are gradually entering a world where robots may become our bosses or coworkers. Imagine your robot coworker keeps messing up, and another robot, its manager, tells you to just shut it down and finish the job yourself. Would you do it? Try the following poll before you read the rest of this article.

A new study from the Human-Robot Interaction Lab at the University of Chicago finds that your answer depends heavily on one surprising factor: whether the two robots seem like they’re already friends who have worked together before.

Read the full study: “I Know That Other Robot, You Can Turn Them Off” by Lauren L. Wright, Andre K. Dang, and Sarah Sebo, presented at RO-MAN 2025, Eindhoven, Netherlands, August 2025.

The Cool Experiment That Tested Robot Loyalty

Researchers had 50 people build block towers with two small Vector robots: one acting as a manager named Alex, the other as a partner named Kit. The partner robot was programmed to make increasingly frustrating mistakes: delivering wrong blocks, ramming into walls, even knocking over the tower.

Each time, the manager robot gave escalating instructions: grab the block yourself, physically move the partner robot, and finally, turn off the partner robot completely.

Here’s the twist: the participants were divided into two groups, and each group saw a different interaction between the manager robot and the partner robot. Half the participants heard the robots talk like old friends who’d “trained together” and were “excited to work as a team.” The other half heard them speak more formally, like strangers meeting for the first time.

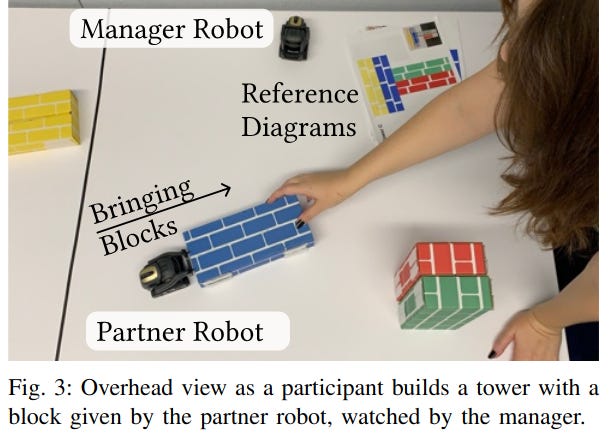

The study recorded how participants reacted to the manager robot’s instructions. Both robots were teleoperated. Participants answered questionnaires and were also observed on camera. The picture above, taken from the paper, shows how participants interacted with the robots to build a tower with colored blocks.

The Results: We Protect Our Robot Friends

People who thought the robots were teammates complied with the manager robot’s undermining instructions 25% of the time. But when the robots seemed like strangers? Compliance rose to 43%.

Even more striking: when the partner robot got stuck, participants in the “robot friends” group were three times more likely to help it instead of following orders. One participant perfectly captured the sentiment: “He’s trying his best so I encouraged him! How else will he learn?”

And perhaps most touching of all: several people who did shut off the partner robot apologized to it afterward.

Why This Matters for Your Future Robot Coworkers

As robots take on more roles in warehouses, hospitals, and offices, this research reveals something profound: our deeply human instinct to protect teammates extends even to machines.

The implications are significant:

Small changes, big impact: The researchers made only minor tweaks to the robots’ dialogue (mentioning shared history, adding warmth to interactions), yet those tweaks cut compliance with problematic instructions from 43% to 25%. This suggests that thoughtful robot design can promote healthier workplace dynamics without complex technical changes.

We bring our humanity to work: Even when interacting with small toy robots, people exhibited empathy, protection instincts, and moral reasoning. We don’t abandon our social nature just because our coworkers are mechanical.

Authority isn’t absolute: Previous studies have shown people will follow robot instructions even for questionable tasks. But this study suggests there are limits, especially when another “teammate” is involved.

Conclusion

This research suggests that robot-to-robot relationships might be just as important as human-to-robot ones. The future of work isn’t just about whether we can collaborate with robots—it’s about designing robot teams that bring out our best collaborative instincts rather than our worst obedient ones. And based on this study, when robots work together as a team, we naturally want to be part of that team too.

There is a subtle difference. No, if he has become a friend, like my Vectors; then I would at least try to convince the manager. Yes, if he is flawed and even destroys things or simply gets out of control.
